This post skips over an in-depth explanation of Shannon entropy and only addresses the intuition surrounding it and how it can be misleading. Googling for “information theory” should come up with plenty of options for a more thorough treatment of information theory.

In information theory, entropy is a way to measure “uncertainty”, or alternatively “information”. The field of information theory itself was first formalized by Claude Shannon, whose name comes up often in the literature.

Conceptually, information and uncertainty are opposing forces: more of one means less of the other.

Lack of information leads to uncertainty. In the absence of any information we are in total uncertainty.

In practice, any situation where we are concerned with information begins with a well-defined foundation that lays out a set of possible outcomes. Each piece of information we receive should narrow down that set until we reach a single, unambiguously certain outcome.

If we only have one possible outcome, then we are certain of that outcome. The measure of uncertainty could be thought of as a way of measuring how far we have to go to get there.

Shannon entropy is a measure of uncertainty

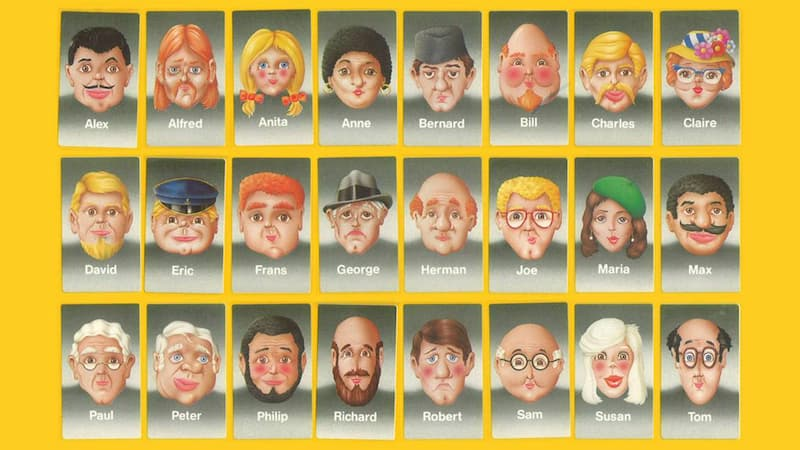

A good example of this is the classic game “Guess Who?”, where you have to identify a person from a set of pictures based on a few pieces of information.

We want to pinpoint a person of interest, which in this case translates to narrowing down the list of possibilities to 1. Each person has an equal probability of being the person of interest. Therefore, at the beginning our uncertainty is 1 in 24: each person has a probability of $\frac{1}{24}$.

Let’s look at two possible pieces of information:

- wears glasses – matches 5 persons

- presents as a man – matches 18 persons

We started with 24 possibilities. Once we know that the person of interest is wearing glasses, we can narrow down the field to 5. On the other hand, knowing that the person presents as a man only narrows down the field to 18. The first piece of information is clearly more valuable than the second. By “valuable” we mean “reduces uncertainty”.

Entropy is a way to quantify, or measure, this value.

Choices of measure for a single outcome

For the sake of discussion, let’s say the probability of a single outcome (call it $\omega$) is $p$. And the measure of information learned from knowing this outcome is $I(p)$, where $I$ is some function which we will figure out here.

Adding independent pieces of information should correspond to adding entropy

Channeling some high school math, we know that if the probability of event $A$ happening is $P(A)$, and the probability of event $B$ happening is $P(B)$, and if these two events are independent, then the probability of both happening is $P(A)\,P(B)$.

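For example, if the pictures are laid out in a grid of $r$ rows and $c$ columns (so $r \times c = 24$), the event “person is in the first row” ($A$) has a probability of $P(A) = \frac{c}{24} = \frac{1}{r}$, while the event “person is in the third column” ($B$) has a probability of $P(B) = \frac{r}{24} = \frac{1}{c}$. Meanwhile, both of these happening at the same time ($A \cap B$, where you can read the $\cap$ as “and”) has a probability of $\frac{1}{24}$, since exactly one person sits in that spot. I.e.

$$P(A \cap B) = \frac{1}{24} = \frac{1}{r} \cdot \frac{1}{c} = P(A)\,P(B)$$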

A good measure should be intuitive, which is to say it should behave in ways that we expect. For example, if we take two independent signals, the measure of both together should equal the sum of each measured separately.

The simplest way to convert a multiplication like $P(A)\,P(B)$ into a sum is to … drumroll … take the logarithm, since $\log\big(P(A)\,P(B)\big) = \log P(A) + \log P(B)$. So we could start with $I(p)$ being something like $\log p$.
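
The row-and-column example and the logarithm trick can both be checked by brute force. This is a minimal sketch under stated assumptions: the board is taken to be a complete grid (several hypothetical 24-person layouts are tried, since any complete grid with at least three columns behaves the same), and `row_column_probabilities` is an illustrative name, not anything from a library.

```python
import math
from fractions import Fraction

def row_column_probabilities(rows: int, cols: int):
    """P(A), P(B) and P(A and B) on a hypothetical rows x cols board.

    A = "person is in the first row", B = "person is in the third column".
    Each cell holds exactly one person and every person is equally likely.
    """
    people = [(r, c) for r in range(rows) for c in range(cols)]
    n = len(people)
    first_row = {p for p in people if p[0] == 0}   # event A
    third_col = {p for p in people if p[1] == 2}   # event B (0-based column 2)
    both = first_row & third_col                   # event "A and B"
    return (Fraction(len(first_row), n),
            Fraction(len(third_col), n),
            Fraction(len(both), n))

# Hypothetical layouts: every complete grid of 24 with at least three columns.
for rows, cols in [(3, 8), (4, 6), (6, 4), (8, 3)]:
    p_a, p_b, p_ab = row_column_probabilities(rows, cols)
    independent = p_ab == p_a * p_b
    # The logarithm turns the product of probabilities into a sum of logs.
    log_additive = math.isclose(math.log(float(p_ab)),
                                math.log(float(p_a)) + math.log(float(p_b)))
    print(f"{rows}x{cols}: P(A)={p_a} P(B)={p_b} P(A and B)={p_ab} "
          f"independent={independent} log_additive={log_additive}")
```

Exact `Fraction` arithmetic is used for the probabilities so the independence check is an exact equality rather than a floating-point comparison.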

More uncertainty should correspond to a larger entropy

Going further, an outcome with a lower probability is more surprising, and hence strictly more informative, than an outcome with a higher probability. So we need:

$$p_1 < p_2 \implies I(p_1) > I(p_2)$$

No uncertainty should correspond to zero entropy

In other words,

$$I(1) = 0$$

because an outcome with probability $1$ is going to happen with certainty.

Putting it all together

The simplest way to make entropy larger for outcomes with lower probability is to negate the logarithm. Doing so has the additional desirable effect that probabilities, which are in the range $(0, 1]$, yield non-negative values. It also satisfies the last requirement for free, since $-\log 1 = 0$.
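
The requirements above can be spot-checked numerically for the candidate $-\log p$. This sketch uses the natural logarithm and an arbitrary, made-up list of probabilities; `neg_log` is a hypothetical helper name used only here. A numerical check over a few points illustrates the properties but is of course not a proof.

```python
import math

def neg_log(p: float) -> float:
    """Candidate information measure -ln(p) for a probability 0 < p <= 1."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return -math.log(p)

# Arbitrary probabilities, sorted from least likely to most likely.
probabilities = [0.01, 0.1, 0.25, 0.5, 0.9, 1.0]
values = [neg_log(p) for p in probabilities]

# Independent events: the measure of both equals the sum of the measures.
assert all(math.isclose(neg_log(p * q), neg_log(p) + neg_log(q))
           for p in probabilities for q in probabilities)
# Lower probability gives a strictly larger value.
assert all(a > b for a, b in zip(values, values[1:]))
# A certain outcome carries no information.
assert neg_log(1.0) == 0
# Every probability in (0, 1] gives a non-negative value.
assert all(v >= 0 for v in values)
```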

So finally, we have the measure:

$$I(p) = -\log p$$

Since Claude Shannon was interested in measuring the information content of digital signals at the time, it made sense to use a more convenient base for the logarithm, like $2$ instead of $e$.

So the final equation is:

$$I(p) = -\log_2 p$$

The choice of $2$ as the base has another nice feature: we can now interpret the information quantity as a number of bits. For example, if we have 5 bits of information where each of the 32 values is equally likely, then any specific outcome has a probability of $\frac{1}{32}$ of occurring.

So knowing the outcome gets us $-\log_2 \frac{1}{32} = 5$ bits of information, which is exactly the number of bits we started with.

This measure of information for a single outcome is called the pointwise entropy, or self-information. “Pointwise” because it measures a single outcome in the probability space.
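
The pointwise entropy is short enough to sketch directly. This is a minimal illustration rather than a library routine: `self_information` is a hypothetical helper name, and the clue counts (5 and 18 matches out of 24) are the ones from the Guess Who example. It checks the 5-bit example and puts numbers on the claim that the glasses clue is more valuable than the “presents as a man” clue.

```python
import math

def self_information(p: float) -> float:
    """Pointwise entropy (self-information) I(p) = -log2(p), in bits."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return -math.log2(p)

# 5 bits with all 32 values equally likely: each value has probability 1/32.
print(self_information(1 / 32))  # 5.0

# The two Guess Who clues: matching people out of 24 equally likely ones.
TOTAL = 24
clues = {"wears glasses": 5, "presents as a man": 18}
for clue, matches in clues.items():
    bits = self_information(matches / TOTAL)
    print(f"{clue}: {matches}/{TOTAL} -> {bits:.3f} bits")
# wears glasses: 5/24 -> 2.263 bits
# presents as a man: 18/24 -> 0.415 bits
```

The rarer clue (glasses) yields about five times as many bits, matching the intuition that it narrows the field far more.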

Choice of measure for a probability space

Now that we have a measure of the information content of a single outcome, how do we measure the information content of a probability space?

For this, we use another simple formula we already know: the expectation. Hence we define the information content of a probability space to be the expected pointwise entropy.

This entropy of a probability space is often denoted $H(P)$, where $P$ is the probability space (defined formally below). For simplicity, we can think of $P$ as a function mapping an outcome to the probability of that outcome.

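The expected pointwise entropy is therefore:

$$H(P) = \sum_{\omega \in \Omega} P(\omega)\, I\big(P(\omega)\big) = -\sum_{\omega \in \Omega} P(\omega) \log_2 P(\omega)$$

where $\Omega$ is the set of all possible outcomes.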
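
The expectation translates almost word for word into code. In this sketch, `entropy` is a hypothetical helper that takes a list of outcome probabilities; zero-probability outcomes are skipped, following the standard convention that $0 \log_2 0$ is treated as $0$. For the Guess Who board, with all 24 people equally likely, it gives $\log_2 24 \approx 4.585$ bits.

```python
import math

def entropy(probabilities) -> float:
    """Expected pointwise entropy H = sum of P(w) * (-log2 P(w)), in bits.

    Outcomes with probability 0 are skipped, following the usual
    convention that 0 * log2(0) is treated as 0.
    """
    return sum(-p * math.log2(p) for p in probabilities if p > 0)

uniform_24 = [1 / 24] * 24      # Guess Who: all 24 people equally likely
fair_coin = [0.5, 0.5]
certain = [1.0]                 # only one possible outcome

print(round(entropy(uniform_24), 3))  # 4.585, i.e. log2(24)
print(entropy(fair_coin))             # 1.0
print(entropy(certain) == 0)          # True: no uncertainty, zero entropy
```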

Formalities

A common critique is that Shannon entropy can be misleading. Let’s see why.

Let’s start with a probability space. It consists of:

- A set of possible outcomes, usually designated as $\Omega$.

  For example, the set of possible persons of interest consists of the 24 individuals pictured above.

  Note that here we are dealing with a discrete probability space, in that we have a countable set of possible outcomes. This isn’t always the case.

- A set of subsets of possible outcomes, usually designated as $\mathcal{F}$.

  In our example, it could be people with moustaches, people wearing hats, people with glasses, etc. A subset doesn’t need to have any semantic meaning, so any group of people from the 24 possible people is a valid subset.

  $\mathcal{F}$ always contains the set of all possible outcomes. I.e. we say $\Omega \in \mathcal{F}$.

- A probability function, usually designated as $P$, that when applied to an element in $\mathcal{F}$ yields the probability of that set of outcomes occurring.

  For example:

  $$P(\{\text{people wearing glasses}\}) = \frac{5}{24}$$

  Also, when applied to $\Omega$ (i.e. the set of all possible outcomes), it yields $1$ as one would expect, since otherwise it would not be the set of all possible outcomes. I.e.:

  $$P(\Omega) = 1$$

Let $(\Omega, \mathcal{F}, P)$ be a probability space.

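So, $\mathcal{F}$ is the set of all possible subsets of $\Omega$, which is also written as $2^{\Omega}$ (the power set of $\Omega$).

And $P$ is a function that maps from $\mathcal{F}$ to the real numbers in the range 0 through 1 inclusive, i.e. $P: \mathcal{F} \to [0, 1]$.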
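
To make these definitions concrete, here is a sketch of a tiny discrete probability space. It assumes a hypothetical four-person board (the names are made up), because enumerating all $2^{24}$ subsets of the real board would mean over 16 million sets; `power_set` and the uniform `P` are illustrative helpers, not part of any library.

```python
from fractions import Fraction
from itertools import chain, combinations

def power_set(omega):
    """All subsets of omega (2^|omega| of them), as frozensets."""
    items = list(omega)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

# Hypothetical miniature board with 4 equally likely people (Omega).
omega = frozenset({"Alex", "Bo", "Cam", "Dee"})
F = power_set(omega)  # named F to mirror the notation in the text

def P(event: frozenset) -> Fraction:
    """Uniform probability of an event (a subset of omega)."""
    return Fraction(len(event), len(omega))

assert len(F) == 2 ** len(omega)          # F = 2^Omega has 16 elements
assert omega in F and P(omega) == 1       # Omega is an event and P(Omega) = 1
assert all(0 <= P(e) <= 1 for e in F)     # P maps F into [0, 1]

print(len(F), P(frozenset({"Alex", "Bo"})))  # 16 1/2
```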

The Shannon entropy of the probability function $P$ is given by:

$$H(P) = -\sum_{\omega \in \Omega} P(\{\omega\}) \log_2 P(\{\omega\})$$